Symbiosis

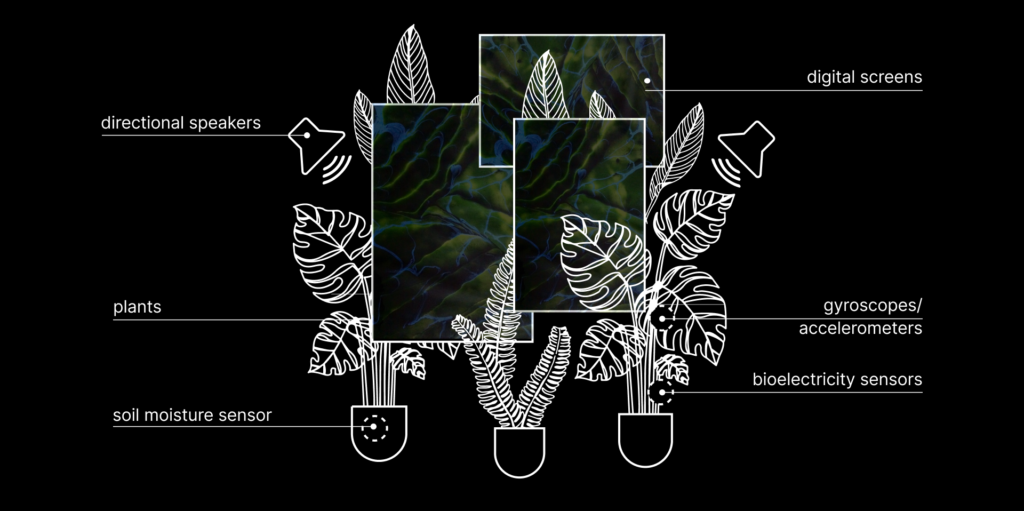

Symbiosis is an interactive installation where plants take the lead in creating art and sound. Using sensors that measure soil moisture and movement, the installation translates their natural signals into dynamic visuals and ambient music. Each plant shapes its own digital representation in real time, revealing its unseen rhythms and behaviours. The goal of this installation is to create an ecosystem where technology and living elements come together, forming a bridge between the digital and the organic. By working in harmony, they generate something beautiful, turning the plants into the true artists of this installation.

Concept

In Symbiosis, plants are not just passive organisms; they are active creators of their own digital and sonic expressions. Using sensors, the installation listens to their biological signals, translating them into dynamic visuals and evolving soundscapes.

The system captures two key data points: soil moisture, which indicates how much water the plant has and influences the colors and textures of the generated visuals; and movement, detected by an accelerometer, which shapes how fluid, distorted, or dynamic the images appear.

These signals directly shape what we see and hear. The visuals react in real time, with movement causing soft distortions and moisture levels controlling color palettes and surface textures. At the same time, the combined sensor values create an ambient soundscape, allowing each plant to generate its own unique presence.

This installation blurs the line between nature and technology, turning plants into active participants in their own representation. Instead of being observed, they shape their own digital identity, making their hidden rhythms and behaviors visible and immersive. Symbiosis offers a new way to experience plants, not just as living organisms but as expressive, creative forces that interact with their environment in ways we can now see and hear.

Visitor Experience

Visitors are invited to engage with a living, multi-sensory ecosystem. As the plants express their vitality through evolving visuals and immersive sound, the installation offers a tangible reflection on the delicate balance between nature and technology. This interactive dialogue encourages a deeper appreciation for the creative power inherent in the natural world.

Research

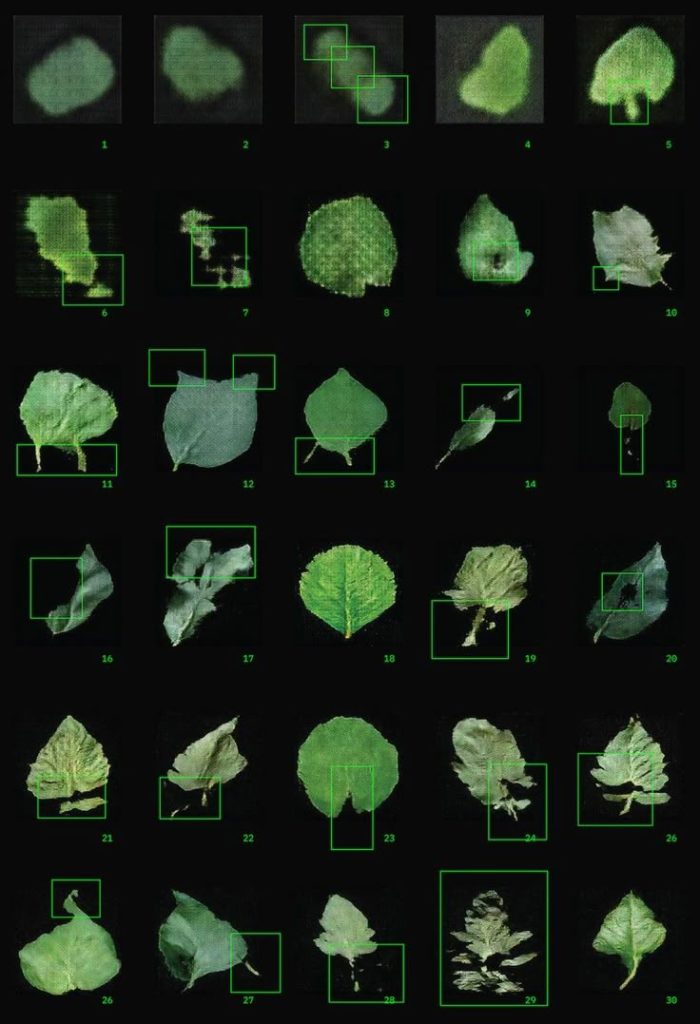

Our research into installations that blend technology with living plants led us to try different approaches, including using flowers and mushrooms. Each option, however, came with its own challenges. Flowers are stunning when they bloom, but their brief display makes them tricky for long-term projects. Mushrooms, while offering some fascinating electrical properties, require closed environments that can lead to mold and other issues, complicating their use.

In the end, these challenges confirmed that sticking with a variety of plants was the best choice. This approach not only takes advantage of the natural resilience of plants but also creates a clean, green, monochromatic aesthetic that works perfectly for our long-term installation.

Case Studies

Studiomobile’s Networking Nature

Touch Grass by @baronlanteigne

EcoLogicStudio’s H.O.R.T.U.S.

Visual & Layout Inspiration

Our visual and structural inspiration came from a range of installations that explore the relationship between nature and digital space. We were particularly drawn to layouts where screens and plants intertwine, forming a seamless interaction between organic and technological elements.

The last example influenced the spatial layout of our installation. We were inspired by the use of metal frameworks to structure screens in a way that feels both architectural and organic. This guided our decision to use a modular approach, allowing for flexibility in positioning the displays while ensuring that plants could grow around and through the installation.

Sketch & 3D Model

Based on our research, we created an initial sketch of the installation layout, mapping out how the screens, plants, and structural elements would come together. This sketch helped us refine our approach, ensuring a balanced composition where the plants and technology feel like they coexist naturally rather than competing for attention.

installation layout

3D mock-up of installation

The story behind the Archive

The Symbiosis Archive is a living record of the plants’ changing states. Sensors track how hydrated each plant is and whether its leaves are moving, and every shift in moisture or movement is captured and transformed into visuals and sound. Instead of just displaying numbers, the installation turns the data into something we can see and hear, making the plants’ invisible changes more tangible.

When a plant moves, the images react with soft distortions, blurred edges, or shifting light, almost as if the plant is affecting its digital reflection. Moisture levels shape the overall feel: a dry plant appears in desaturated, cracked textures, while a healthy plant produces smooth, rich tones. Too much water causes the visuals to glow with high saturation, soft mist-like textures, and shimmering light effects, mirroring the excess moisture.

At the core of this process is an AI layer that acts as a bridge between the organic and digital worlds. It doesn’t just take in raw sensor readings; it interprets them, adding layers of abstraction that make the images feel more alive. Movement makes the visuals pulse and flow, while hydration shifts the color balance and texture. The AI reads the nuances of each plant’s state and enhances them, allowing the plants to actively shape their own digital presence.

This interaction between nature and technology makes the plants more than just subjects: they become co-creators of the installation. Their biological rhythms are transformed into something we can experience in real time, creating a space where plants, AI, and human perception meet in a constantly evolving dialogue.

Image Generation

The visual style of the installation was carefully built, starting with a selection of images that set the mood and atmosphere. These reference images, taken from existing archives, helped us decide on the textures, lighting, and overall look we wanted to achieve.

We then used the Replicate platform to generate images that directly respond to sensor data from the plants. The AI works with two key inputs. Movement data from the accelerometer affects how fluid and distorted the visuals are, creating shifting shapes and light effects. Soil moisture levels determine the colors and textures, so the images visually reflect how much water the plant has.

This creates a real-time connection between plant and technology. When a plant is dry, the images look faded and rough, with cracked textures that resemble dried-out landscapes. When a plant is well-hydrated, the visuals become smooth and natural, with rich colors and soft textures that reflect balance. When a plant is overwatered, the images turn bright and saturated, with glowing highlights and mist-like effects.
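
The mapping from plant state to visual style described here can be written down as a small lookup. The sketch below is only an illustration: `describeVisualStyle`, the exact descriptor strings, and the wording for a plant that is not moving are assumptions drawn from this section, not the prompts or settings actually used with Replicate.

```javascript
// Hypothetical sketch: turn a plant's sensor state into style descriptors.
// Moisture levels follow the installation's convention: 0 = dry, 1 = good, 2 = wet.
const MOISTURE_STYLES = {
  0: "faded colors, rough cracked textures, dried-out landscape",
  1: "rich colors, smooth soft textures, natural balance",
  2: "bright saturated colors, glowing highlights, mist-like effects",
};

function describeVisualStyle(moisture, movement) {
  const moistureStyle = MOISTURE_STYLES[moisture];
  if (moistureStyle === undefined) {
    throw new RangeError(`Unknown moisture level: ${moisture}`);
  }
  // The "still" wording is an assumption; the text only describes the moving case.
  const movementStyle = movement
    ? "fluid distorted shapes, shifting light"
    : "still, clearly defined forms";
  return `${moistureStyle}, ${movementStyle}`;
}

console.log(describeVisualStyle(0, true));
```

A string like this could serve as part of a text prompt or as a label when sorting generated images into the archive.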

After generating the AI-driven images, we used Photoshop to refine them, making sure the sensor-driven changes were clear and expressive. This step helped adjust contrast, structure, and colors, making it easier to see how the plant’s condition affects the visuals. We fine-tuned the brightness and textures to create a balance between the natural plant forms and the AI-generated effects.

The final images exist in a space between natural and digital. The real shapes of the plants are still there, but the AI adds a new, dreamlike layer that makes them feel almost otherworldly. Leaves stretch and dissolve into flowing light, veins glow as if they’re pulsing with energy, and textures shift between realistic and abstract. The result is a unique mix of organic life and technology, where plants are not just growing but actively creating.

Generated Archive

Coding

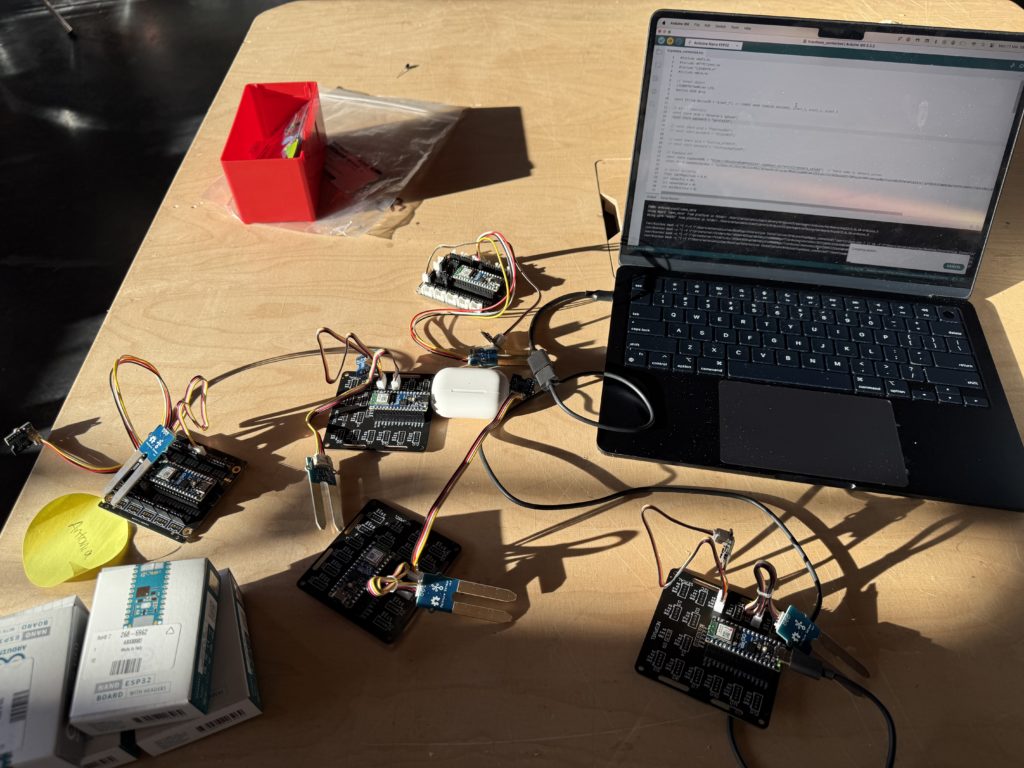

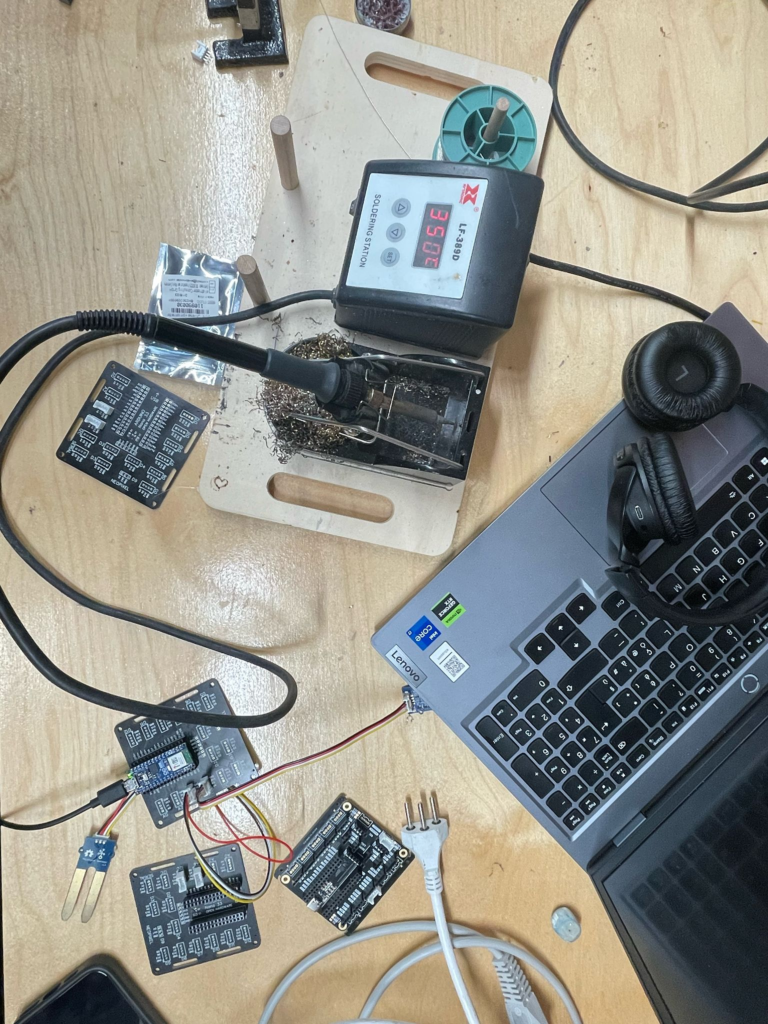

Sensor Data Collection with Arduino

Each plant in the installation is paired with an Arduino Nano ESP32, responsible for gathering real-time environmental data. The Arduino is connected to a soil moisture sensor, which detects the plant’s hydration levels, and an accelerometer, which registers movement. These values are processed locally and then transmitted via WiFi to Supabase, a cloud database where all sensor readings are stored. By assigning each Arduino a unique device ID, the system ensures that data remains correctly linked to the corresponding plant.

Since the exhibition takes place indoors, we introduced an additional external stimulus to simulate natural movement. A small fan is hidden between the plants, creating subtle airflow that influences the accelerometer readings. This controlled motion allows the plants to respond dynamically even in a closed space.

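    // Excerpt from loop(): sensorPin, sensorValue, soilMoisture, LIS and
    // lastMagnitude are declared elsewhere in the sketch.

    // Read soil moisture and reduce it to a level: 0 = dry, 1 = good, 2 = wet.
    // map() uses integer math, so level 2 is only reached at readings of 2400
    // or more; constrain() keeps higher readings from producing a level above 2.
    sensorValue = analogRead(sensorPin);
    soilMoisture = constrain(map(sensorValue, 0, 2400, 0, 2), 0, 2);

    // Read accelerometer values
    float accelX = LIS.getAccelerationX();
    float accelY = LIS.getAccelerationY();
    float accelZ = LIS.getAccelerationZ();

    // Movement = change in acceleration magnitude since the previous reading
    float magnitude = sqrt(accelX * accelX + accelY * accelY + accelZ * accelZ);
    float changeMagnitude = fabs(magnitude - lastMagnitude);
    bool movementDetected = (changeMagnitude > 0.05);
    lastMagnitude = magnitude;

    // Send data to Supabase
    sendToSupabase(soilMoisture, movementDetected);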
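
To check the thresholds without hardware, the same logic can be reproduced in plain JavaScript. This is a sketch under stated assumptions: `arduinoMap`, `moistureLevel`, and `createMovementDetector` are hypothetical helper names, the values 2400 and 0.05 are taken from the Arduino excerpt, readings outside 0–2400 are clamped to the 0–2 range, and the starting value of `lastMagnitude` is a guess because its initialization is not shown.

```javascript
// Integer re-implementation of Arduino's map(); the division truncates.
function arduinoMap(x, inMin, inMax, outMin, outMax) {
  return Math.trunc(((x - inMin) * (outMax - outMin)) / (inMax - inMin)) + outMin;
}

// Raw ADC reading -> moisture level 0, 1 or 2, clamped to that range.
function moistureLevel(raw) {
  const level = arduinoMap(raw, 0, 2400, 0, 2);
  return Math.min(2, Math.max(0, level));
}

// Returns a function that reports whether the acceleration magnitude changed
// by more than `threshold` since the previous sample.
function createMovementDetector(threshold = 0.05) {
  // Assumption: starts at 0, so the first sample of a resting sensor
  // (magnitude around 1) is reported as movement.
  let lastMagnitude = 0;
  return function detect(x, y, z) {
    const magnitude = Math.hypot(x, y, z);
    const moved = Math.abs(magnitude - lastMagnitude) > threshold;
    lastMagnitude = magnitude;
    return moved;
  };
}

for (const raw of [0, 1199, 1200, 2399, 2400, 4095]) {
  console.log(raw, "->", moistureLevel(raw));
}
```

Because of the truncating division, readings from 0 to 1199 give level 0, 1200 to 2399 give level 1, and only readings of 2400 or more give level 2.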

Testing sensors

Data Storage & Retrieval with Supabase

Supabase functions as the project’s central data hub, managing the continuous flow of sensor information. The Arduino pushes JSON-formatted data to the database at regular intervals, capturing changes in soil moisture and plant movement. Meanwhile, the frontend system, running on a Raspberry Pi, continuously queries Supabase, retrieving the latest values and using them to drive both the visual and sound components of the installation. This connection allows each plant’s changing state to be reflected in real time.

    #include <WiFi.h>
    #include <HTTPClient.h>

    // POST one reading as JSON to supabaseURL.
    // supabaseURL, supabaseApiKey and deviceID are defined elsewhere in the sketch.
    void sendToSupabase(int moisture, bool movement) {
      if (WiFi.status() != WL_CONNECTED) {
        Serial.println("Skipping request, WiFi is disconnected.");
        return;
      }

      HTTPClient http;
      http.begin(supabaseURL);
      http.addHeader("Content-Type", "application/json");
      http.addHeader("apikey", supabaseApiKey);
      http.addHeader("Authorization", "Bearer " + String(supabaseApiKey));

      // Start from a String so that + concatenates even if deviceID is a char array
      String jsonPayload =
          String("{\"device_id\": \"") + deviceID + "\", \"values\": {"
          "\"moisture\": " + String(moisture) +
          ", \"movement\": " + (movement ? "true" : "false") + " } }";

      int httpResponseCode = http.POST(jsonPayload);
      Serial.println("Supabase Response: " + String(httpResponseCode));
      http.end();
    }

Visual Display with JavaScript & HTML

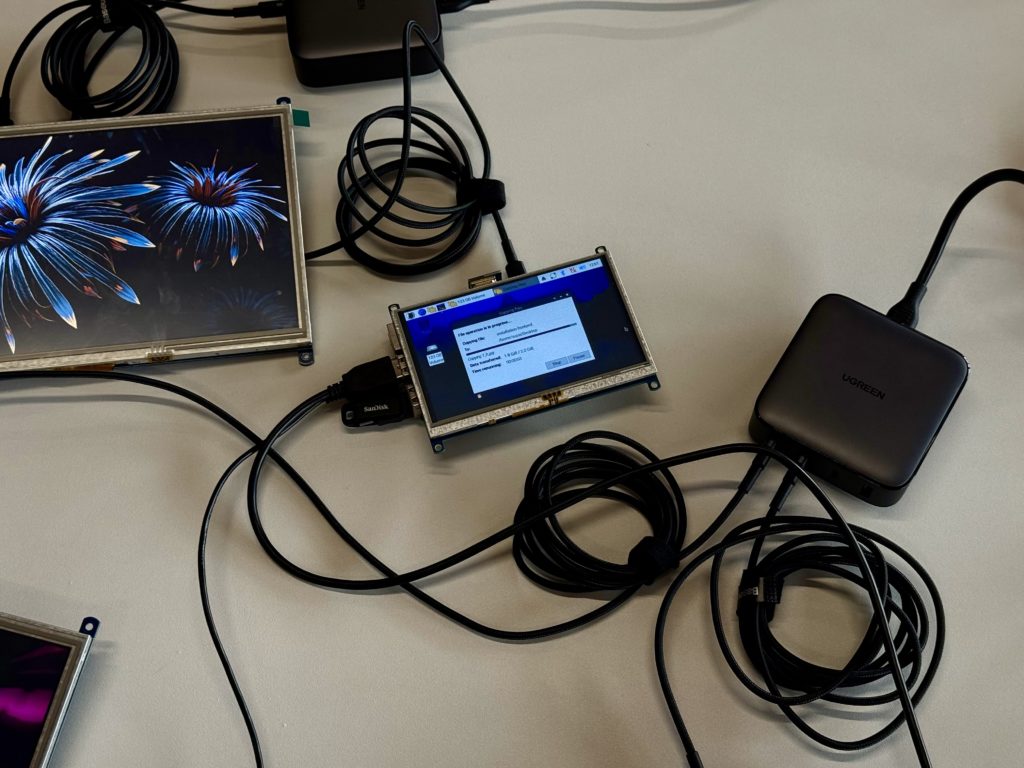

The Raspberry Pi acts as a dedicated display system, running a lightweight web-based interface built with HTML, CSS, and JavaScript. The interface dynamically selects images based on the retrieved sensor values, pulling from a structured archive of visuals that represent different moisture levels and movement states. To ensure a smooth transition between images, a fade-in/fade-out effect is applied, creating an organic visual response to the plant’s condition. This continuously evolving display transforms sensor data into a shifting visual representation of the plant’s environment.

    // Fetch the most recent reading for this device and pass it on to the
    // visual and sound components. db, DEVICE_ID, updateImage and
    // sensorSoundData are defined elsewhere in the frontend code.
    async function fetchSensorData() {
      const { data, error } = await db
        .from("sensors_values")
        .select("values")
        .eq("device_id", DEVICE_ID)
        .order("created_at", { ascending: false })
        .limit(1);

      if (error) throw error;

      if (data.length > 0) {
        const sensorData = data[0].values;
        updateImage(sensorData.moisture, sensorData.movement);
        sensorSoundData.moisture = sensorData.moisture;
        sensorSoundData.movement = sensorData.movement;
      }
    }
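
Two pieces are not shown in this excerpt: how `updateImage` chooses a file from the archive, and how `fetchSensorData` is called repeatedly. The sketch below illustrates one way both could work. The folder layout, the number of variants per state, the helper names `pickImagePath` and `startPolling`, and the polling interval are all assumptions, not the installation's actual code; the fade transition is left out.

```javascript
// Hypothetical archive layout: archive/<moisture>/<motion>/<index>.jpg
const MOISTURE_FOLDERS = ["dry", "good", "wet"];

// Pick a random image for the given state. `random` is injectable for testing.
function pickImagePath(moisture, movement, variants = 4, random = Math.random) {
  // Unknown levels fall back to "good", like the fallback in the sound code.
  const folder = MOISTURE_FOLDERS[moisture] ?? MOISTURE_FOLDERS[1];
  const motion = movement ? "moving" : "still";
  const index = Math.floor(random() * variants) + 1;
  return `archive/${folder}/${motion}/${index}.jpg`;
}

// Call an async task repeatedly, waiting intervalMs after each run finishes.
// A failed run is reported but does not stop the loop.
function startPolling(task, intervalMs, onError = console.error) {
  let timer = null;
  let stopped = false;
  async function tick() {
    try {
      await task();
    } catch (err) {
      onError(err);
    }
    if (!stopped) timer = setTimeout(tick, intervalMs);
  }
  tick();
  return function stop() {
    stopped = true;
    clearTimeout(timer);
  };
}

// Example usage (2000 ms is an arbitrary interval):
// const stop = startPolling(fetchSensorData, 2000);
```

Scheduling the next run only after the previous one finishes avoids overlapping requests when the network is slow, which a fixed `setInterval` would not guarantee.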

Sound Synthesis with Web Audio API & Bluetooth Speaker

Alongside the visuals, the installation generates a dynamic soundscape using the Web Audio API. Instead of playing pre-recorded tracks, the system synthesizes sounds in real time, directly influenced by the plant’s sensor readings. Moisture levels define pitch variations, while movement alters modulation depth and frequency, introducing shifts in texture and rhythm. A combination of oscillators, filters, reverb, and delay effects helps shape the sound, creating an immersive sonic environment that evolves alongside the plant.

To anchor the auditory experience within the space, only one Raspberry Pi is connected to a Bluetooth speaker, and it generates sound based on the data from a single selected plant. This approach creates a focal point for the sonic output, allowing one plant’s activity to be amplified while the others remain visually represented through the screens.

    // Plays one note every 1.2 s using the latest sensor values.
    // sensorSoundData is updated by fetchSensorData().
    function TuneGenerator(context) {
      const notesGenerator = new NotesGenerator(context);
      setInterval(() => {
        notesGenerator.playNote(sensorSoundData.moisture, sensorSoundData.movement);
      }, 1200);
    }

    function NotesGenerator(context) {
      // Candidate frequencies in Hz for each moisture level
      const scaleMapping = {
        0: [220, 233, 246, 261, 277], // Dry: Lower frequencies
        1: [330, 349, 370, 392, 415], // Good: Mid-range frequencies
        2: [392, 415, 440, 466, 494]  // Wet: Higher frequencies
      };

      this.playNote = function (moisture, movement) {
        // Unknown moisture levels fall back to the "good" scale
        const notes = scaleMapping[moisture] || scaleMapping[1];
        const freq = notes[Math.floor(Math.random() * notes.length)];

        const carrier = context.createOscillator();
        const modulator = context.createOscillator();
        const gainNode = context.createGain();
        const filter = context.createBiquadFilter();

        carrier.type = "sine";
        modulator.type = "sine";
        // Slow modulator: faster when movement is detected
        modulator.frequency.value = movement ? 2.0 : 0.5;
        gainNode.gain.value = 0.6;
        carrier.frequency.setValueAtTime(freq, context.currentTime);

        // Low-pass filter just above the note frequency
        filter.type = "lowpass";
        filter.frequency.setValueAtTime(freq * 1.5, context.currentTime);

        // The modulator output (range -1 to 1) is added to the carrier frequency;
        // the carrier then runs through filter -> gain -> output.
        modulator.connect(carrier.frequency);
        carrier.connect(filter);
        filter.connect(gainNode);
        gainNode.connect(context.destination);

        // Each note lasts 4 s, so consecutive notes overlap
        modulator.start();
        carrier.start();
        carrier.stop(context.currentTime + 4);
        modulator.stop(context.currentTime + 4);
      };
    }
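
The three frequency lists in `scaleMapping` sit close to runs of five consecutive semitones in twelve-tone equal temperament, starting near A3 (220 Hz), E4 (330 Hz), and G4 (392 Hz). Assuming that reading is right, such lists can be derived from a root frequency with f_n = f_0 · 2^(n/12). `semitoneRun` and `derivedScales` are hypothetical names for this sketch, not part of the installation's code.

```javascript
// Frequencies of `count` consecutive semitones starting at rootHz
// (twelve-tone equal temperament: each step multiplies by 2^(1/12)).
function semitoneRun(rootHz, count = 5) {
  return Array.from({ length: count }, (_, n) => rootHz * Math.pow(2, n / 12));
}

// Roots taken from the first entry of each list in scaleMapping.
const derivedScales = {
  0: semitoneRun(220), // dry
  1: semitoneRun(330), // good
  2: semitoneRun(392), // wet
};

for (const [level, freqs] of Object.entries(derivedScales)) {
  console.log(level, freqs.map((f) => f.toFixed(1)).join(", "));
}
```

Each derived value lands within 1 Hz of the corresponding hand-written entry, so further moisture levels or longer scales could be added by choosing a new root instead of typing frequencies by hand.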

Physical Construction

The way we built the installation was just as important as the technology behind it. We wanted the connection between living plants and digital displays to feel real and immersive, not just symbolic. To make this happen, we designed a structure using metal pipes, chosen for their industrial look, which hold both the screens and the plants. The screens are placed so they blend into the greenery, making it clear that nature and technology are constantly interacting.

We spent time choosing different types of plants, not just for how they looked but also for their different soil moisture needs. Some plants thrive in drier conditions, while others need more water, and we wanted to reflect that diversity. We also picked a mix of small and large leaves, tall and short plants, making sure the setup had depth and contrast. This variety made the installation feel more like a real, evolving ecosystem rather than a fixed arrangement.

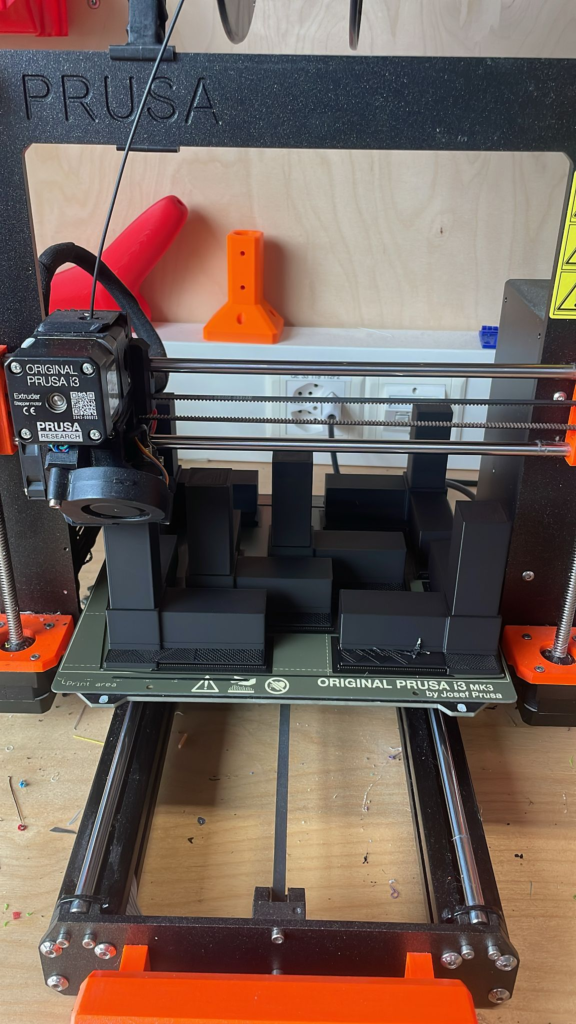

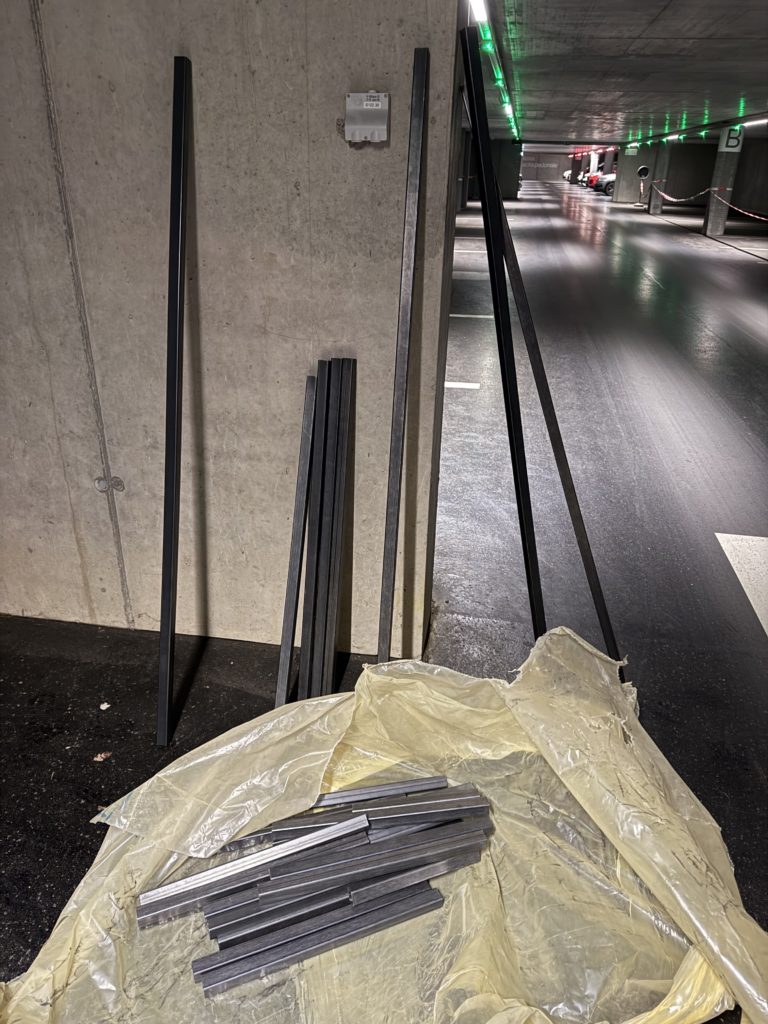

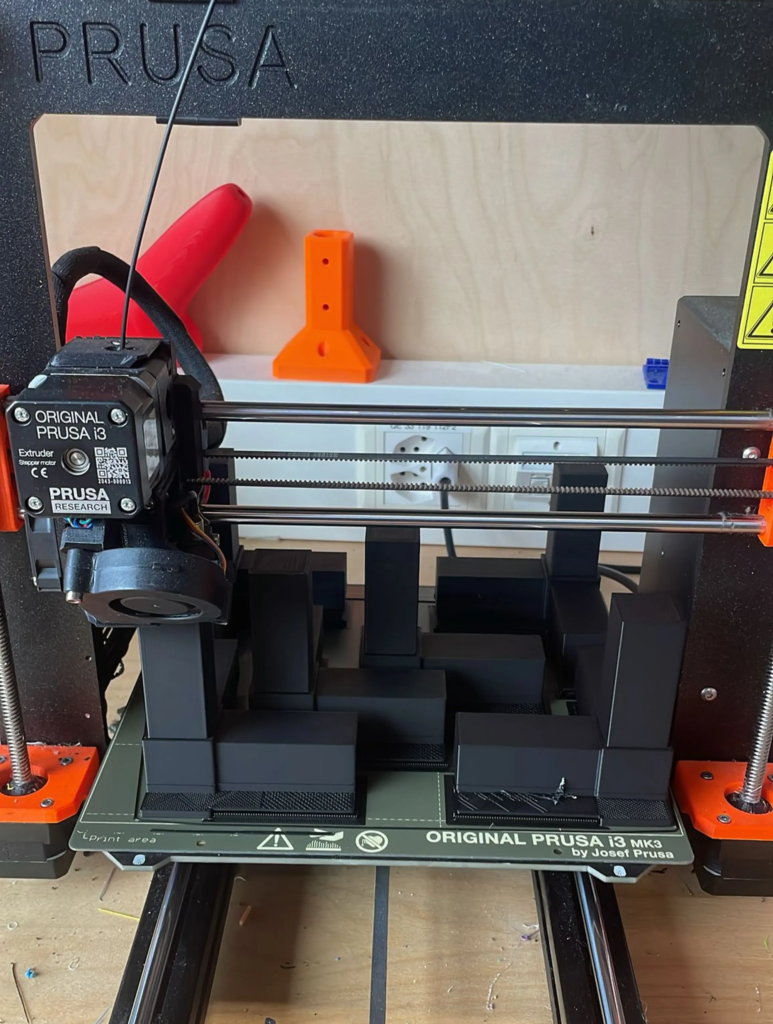

To build the framework, we cut metal pipes to size and used custom 3D-printed joints to hold everything together. One of the biggest challenges was moving the installation: carrying heavy metal parts and delicate plants wasn’t easy. But rather than treating it as a problem, we came to see it as part of what made this project exciting. It pushed us beyond just working on a computer or using minimal fabrication. In the end, building something that felt real, physical, and alive made the whole process even more rewarding.

Process photos: buying and cutting the metal pipes, 3D printing the joints, soldering, structure building and assembly, first assembly test, plant transportation, setting up the installation, and the team, exhausted but happy.

Space & Set-up

The exhibition took place in Saceba, the old cement factory near Mendrisio. Its open floor plan and raw, industrial character made it an ideal backdrop for our installation. We positioned our structure near the large windows to make the most of the natural light, which softened the bare concrete surroundings. The plants and framework contrasted sharply with the factory’s rough surfaces, underlining the theme of symbiosis between organic elements and an industrial setting.

Photos: Saceba from outside, installation set-up, installation set-up for documentation, the exhibition.