News to image

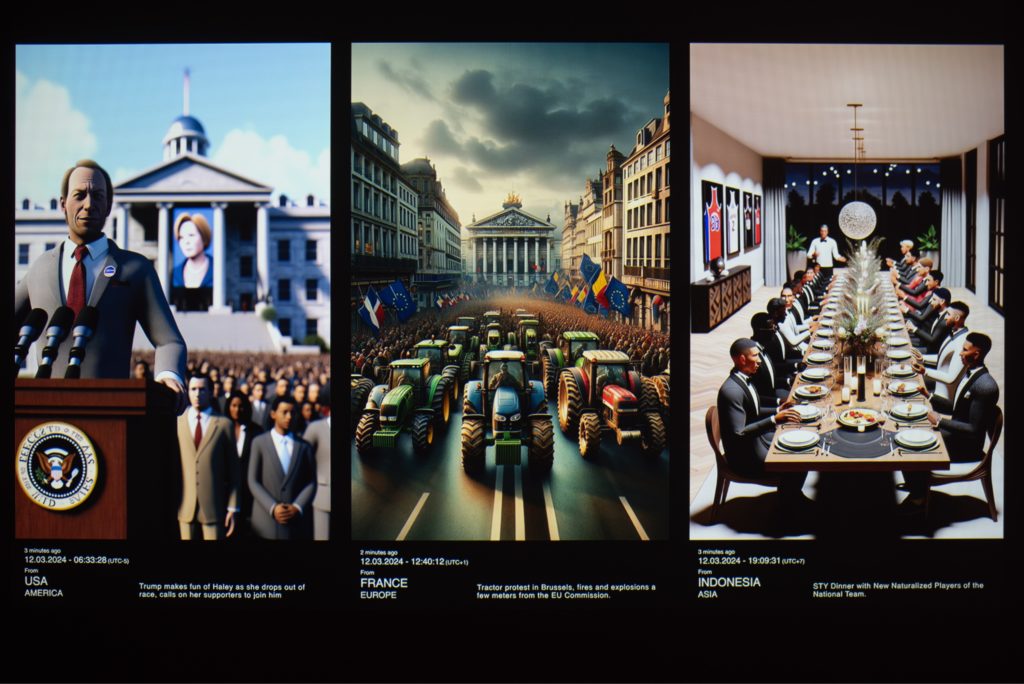

News to Image is the simulation of a thought pattern that is exposed in real time to headlines of breaking news and generates a representative image of its understanding of the topic. The intention is to replicate the natural process that occurs within the human mind when it receives a headline and generates an initial summary before going deeper into the topic. By replicating this process on three different screens, each dedicated to a different continent (America, Europe, and Asia), it also creates a comparison of the diverse media communication, demonstrating how the selection of different themes and the way they are presented influences comprehension.

Img 01

News to image.

Images

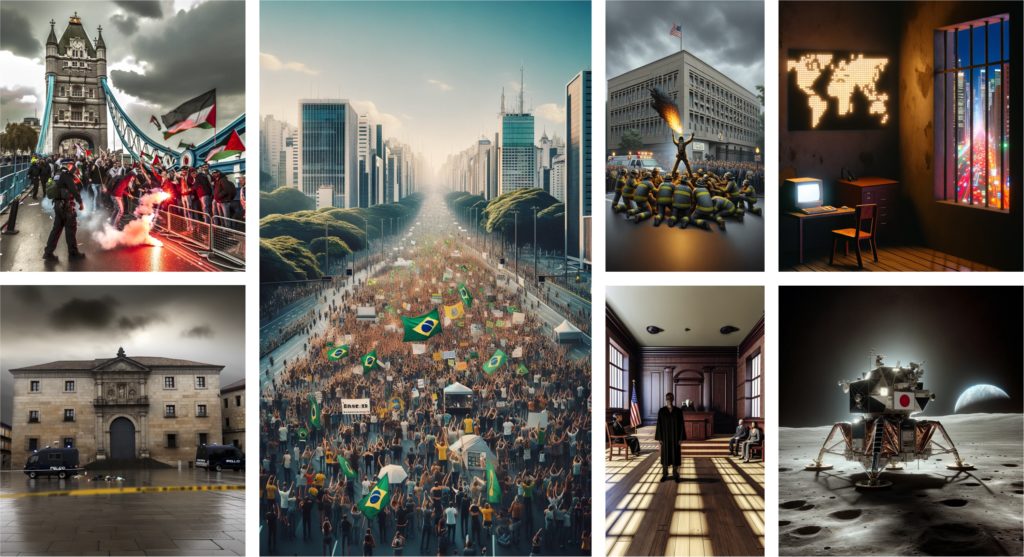

Img 02

Front view installation.

Img 03

Installation detail on animation.

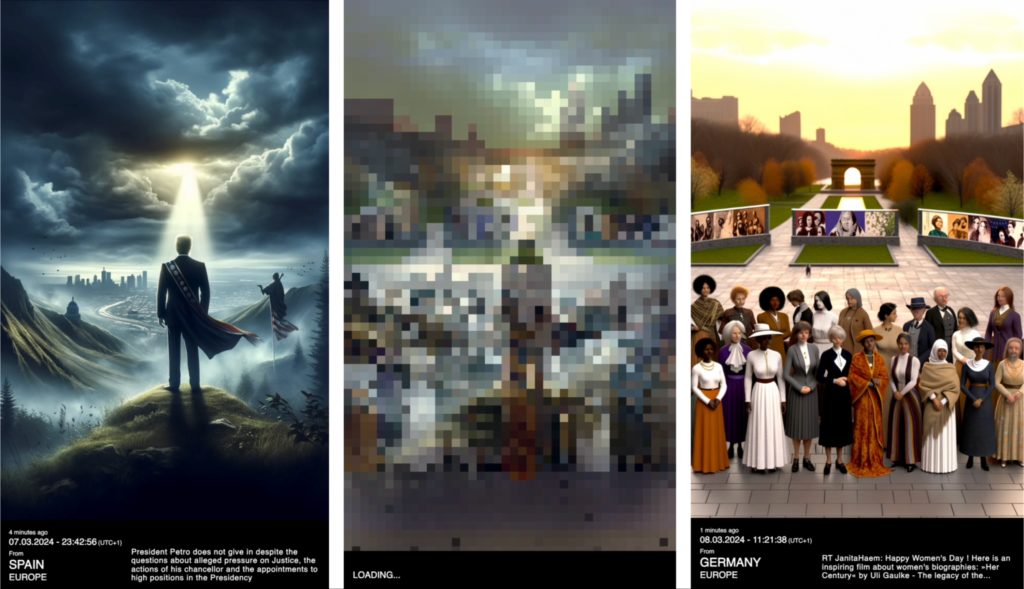

Img 04

Generated image archive.

Img 05

Image archive, zoom on news.

Video

Video 01

News to image video presentation.

Concept

Real time news

Every day, when we scroll through news headlines, our minds instantly paint a picture of what we expect the story to be about. This mental image is shaped by what we already know about the topic and how the headline describes it to us. Whether we’re familiar with the subject or not, our mental picture can be detailed or simple, depending on our background knowledge. This shows how our understanding of news is influenced not only by the content itself, but also by our own interpretations and experiences.

Media communication

The way news is interpreted is heavily influenced by the variety of content available and how it is presented. Differences in interpretation therefore become even clearer when we compare the media communication of different geographical areas, such as America, Europe, and Asia. By analyzing which news stories are considered significant and how they are conveyed, we gain insight into the distinct priorities and goals of each of these systems and, above all, into how differently they communicate. These three areas were selected because their evident media power helps provide a more complete picture. Starting from this, the same process could be applied to each of the seven continents individually, to explore and visualize the differences among them in even greater depth.

Thought pattern through AI

This process can be replicated through artificial intelligence, which, being developed by humans, inherently carries biases and operates with a finite dataset. In this context, AI serves as a model of human thought processes. When exposed to a news headline for the first time, the AI goes through a similar process: it generates an image that encapsulates its understanding of the topic, based on the data it was trained on.

Img 06

Outcomes created with AI.

In person installation

The exhibition involves three screens that alternately present new images generated by artificial intelligence based on real-time news headlines from X. At the moment of generating these images, an emotionless voice reads out the news headline, capturing the user’s attention during the transition and emphasizing the beginning of the automatic generation process. Each screen updates periodically, and the three screens are staggered in time to allow the user to focus on each continent at different times. The entire experience can also be adapted for use with a single projector that projects three different frames, one for each continent.

Img 07

Screens option.

Img 08

Projector option.

Choosing the technology

The installation integrates several technologies, each selected for a specific purpose after evaluating which tool best suited each goal. The news is sourced directly from X, a social media platform widely used as a source of information, continuously updated in real time and based primarily on text posts. Image generation uses DALL-E 3, an artificial intelligence model capable of generating images from simple textual prompts. A Supabase database stores the generated images and related information. The Microsoft Translator API translates each prompt into English, and ElevenLabs reads the prompts aloud: in addition to offering a wide range of voices, it allows the tone to be customized through parameters.

Client - Server

The entire experience is based on a client-server communication system, repeated for each continent. Once initiated, the server calls the start() function, which checks whether the last image loaded in the Supabase archive has already been displayed on the screen. If it has, the getTweet() function is invoked to retrieve the latest post made on the X platform, specifically from the list of posts related to the continent of interest. Once the text, which serves as a prompt, is obtained, it is translated into English and processed by ChatGPT to turn it into a suitable prompt for DALL-E 3, which generates the image that is then displayed on the screen. Once the photo is ready, it is uploaded to the Supabase table, where it waits to be used.

The function checks that in the "America" table of Supabase, the last uploaded image, identified by its ID, has been marked as "used" through a boolean variable before fetching a new tweet.

    // start the server
    async function start() {
      console.log("--------START THE PROCESS--------");
      // fetch the most recently uploaded row of the "america" table
      const { data, error } = await supabase
        .from("america")
        .select("*")
        .order("id", { ascending: false })
        .limit(1)
        .single();
      if (error) {
        console.error("Error fetching the last uploaded image URL:", error);
        return null;
      }
      if (data.used) {
        // the last image has already been shown: fetch a new post
        getTweet();
      } else {
        // otherwise check again after one second
        setTimeout(() => {
          start();
        }, 1000);
      }
    }

    start();

On the client side, the code periodically checks whether a new image is available to be displayed. If so, the photo is downloaded from the Supabase table, along with the information needed to fill in the caption. A pixel animation then handles the transition between the previous photo and the newly downloaded one. Meanwhile, the prompt, which doubles as the news headline, is read aloud by an ElevenLabs voice.
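The periodic check on the client can be sketched as a small polling helper. This is only a minimal illustration: the helper name, the injected `fetchLatest` and `onNewImage` callbacks and the one-second interval are assumptions, while the `id` and `used` fields follow the table described in this text.

```javascript
// Hypothetical polling helper: the names, the interval and the injected
// callbacks are assumptions for illustration, not the installation's code.
function createImagePoller({ fetchLatest, onNewImage, intervalMs = 1000 }) {
  let lastShownId = null; // id of the last row already handed to the display
  let timer = null;

  // One polling step: fetch the newest row and show it only if it is unseen.
  async function tick() {
    const row = await fetchLatest();
    if (row && !row.used && row.id !== lastShownId) {
      lastShownId = row.id;
      await onNewImage(row);
      return true;
    }
    return false;
  }

  return {
    tick,
    start() {
      if (timer === null) {
        timer = setInterval(() => tick().catch(console.error), intervalMs);
      }
    },
    stop() {
      clearInterval(timer);
      timer = null;
    },
  };
}
```

In such a setup, `fetchLatest` would wrap the Supabase query and `onNewImage` would start the transition, the caption update and the voice.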

Server

X (Twitter)

The posts fetched from X are downloaded in real-time through an API that allows periodic checking of the latest posts from a curated list. These lists consist of collections of various profiles, so following a list is like accessing a tailored homepage. For each continent, a specific list has been created, which follows around twenty different news profiles from the various states that constitute the respective continent. Once the latest headline is downloaded, its metadata is saved to later complete the caption. Each X profile is structured as an object within a JSON, allowing for retrieval of its respective state and time zone, to enable the display of the publication location and local time of the news.
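As a rough sketch of how the stored metadata could be turned into a publication location and local time, the helper below looks up an account and applies its UTC offset. The function name and the fixed-offset arithmetic are assumptions (daylight saving time is ignored); the account fields mirror the JSON structure described here.

```javascript
// Hypothetical helper: looks up an account entry and derives the local time
// of publication from its UTC offset (stored as a string such as "-5").
const accounts = [
  { id: "BBCNorthAmerica", timezone: "-5", state: "USA" },
];

function describePost(accountId, publishedAtUtc, accountList = accounts) {
  const account = accountList.find((a) => a.id === accountId);
  if (!account) return null;

  const offsetHours = Number(account.timezone);
  // Shift the UTC timestamp by the offset, then read it back as UTC fields.
  const shifted = new Date(publishedAtUtc.getTime() + offsetHours * 60 * 60 * 1000);
  const hh = String(shifted.getUTCHours()).padStart(2, "0");
  const mm = String(shifted.getUTCMinutes()).padStart(2, "0");

  return { state: account.state, localTime: `${hh}:${mm}` };
}
```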

In the example provided, the profile with the account ID "BBCNorthAmerica" has a corresponding time zone of UTC-5 and is located in the USA.

    {
      "accounts": [
        {
          "id": "BBCNorthAmerica",
          "timezone": "-5",
          "state": "USA"
        },
        ...
      ]
    }

To refine the prompt so that it can be read correctly by ElevenLabs and used by ChatGPT, it undergoes a check in which special characters such as “#” and “@”, as well as URLs, are removed, leaving only the text.

Function to remove "#", "@" or URL.

    function removeUrl(str) {
      const regex = /http\S*\s?|[#@]/g;
      return str.replace(regex, "");
    }

Translator

The post is translated using the Microsoft Translator API so that the prompt is returned in English regardless of the original language. This enables it to be displayed on the screen as the image description. This step is necessary because the posts come from different countries and therefore differ in language and alphabet.
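A translation step of this kind could be sketched as follows, using the Translator Text API v3.0 endpoint. The function name, the `key` and `region` placeholders and the injectable `fetchImpl` parameter are assumptions made for illustration and testing; the installation's own call may differ.

```javascript
// Sketch of a call to the Microsoft Translator Text API (v3.0).
// `key`, `region` and the injected `fetchImpl` are placeholders/assumptions.
async function translateToEnglish(text, { key, region, fetchImpl = fetch }) {
  const url =
    "https://api.cognitive.microsofttranslator.com/translate?api-version=3.0&to=en";
  const response = await fetchImpl(url, {
    method: "POST",
    headers: {
      "Ocp-Apim-Subscription-Key": key,
      "Ocp-Apim-Subscription-Region": region,
      "Content-Type": "application/json",
    },
    // The source language is omitted so the service detects it automatically.
    body: JSON.stringify([{ Text: text }]),
  });
  if (!response.ok) {
    throw new Error(`Translation failed with status ${response.status}`);
  }
  const result = await response.json();
  return result[0].translations[0].text;
}
```

Leaving out the source language lets the same call handle posts in any language, which matches the mixed origins of the lists.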

DALL-E 3

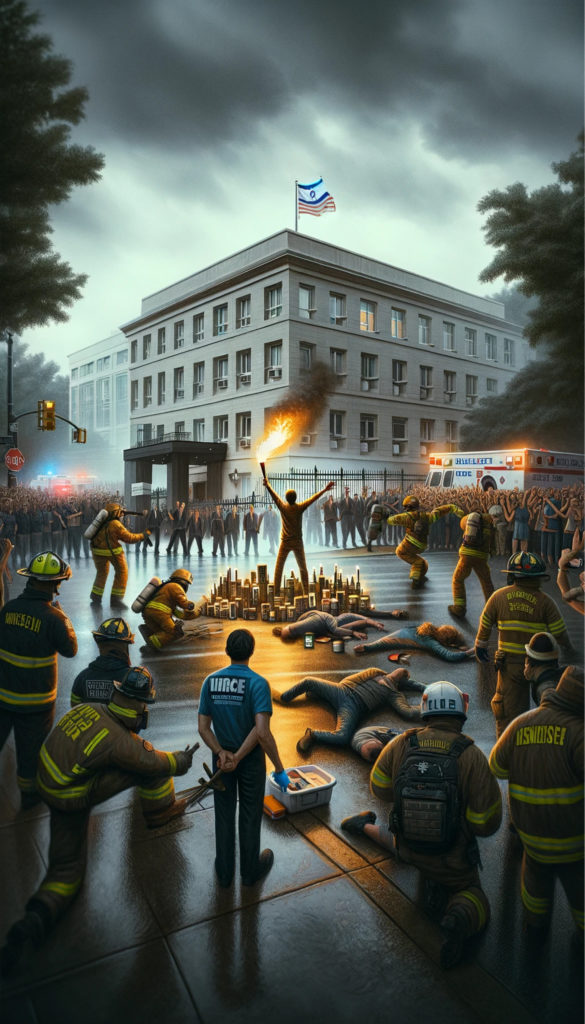

The image generation process required extensive refinement of the prompt. Initial tests of whether the posts could be used as prompts were carried out in a ChatGPT chat, which gave good results quickly because the chat can be instructed on the characteristics of the desired outcome and remembers them. The first issues encountered were policy and censorship restrictions, but with some adjustments to the prompt it was possible to obtain good initial results.

Simply adding the following instruction before each post produced the first well-developed results: “Create an image that has a realistic style starting from the following prompt. If there are public figures or topics that need to be censured, fix them by getting as close as possible to the topic and by achieving a resemblance with the original images. If the topic it’s too explicit or graphic make changes in order to adapt it to your filters. Don’t distance the image too much from the starting topic, stay close to the prompt. Make it in a 9:16 ratio.”

Img 09

Original post: “Man sets himself on fire outside Israeli embassy in Washington DC”.

Once the DALL-E 3 API was implemented with the same prompt, the results were less photorealistic and often resembled sketches or low-quality collages. This is because the API keeps no conversation history, so the prompt is not progressively improved as it was in the chat. Additionally, DALL-E requires a very detailed description in order to create the desired image.

Img 10

Prompt: “Breaking news: Palestinian Authority Prime Minister Mohammed Shtayyeh has offered the resignation of the government ahead of a cabinet meeting on Monday. Follow today's live coverage.”

To overcome this issue, the image generation process is preceded by a call to the ChatGPT API, which rewrites the prompt starting from the text of the post, making it as comprehensive as possible and aligning it with OpenAI’s policy, thereby enabling it to be given to DALL-E 3 as a prompt and obtaining the desired results.
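The image step that receives the rewritten prompt could be sketched like this. It assumes an `openai` client from the official Node library is passed in; the function name is hypothetical, and the portrait size is an assumption chosen to approximate the vertical format mentioned earlier.

```javascript
// Sketch of the image step, assuming an `openai` client from the official
// Node library is passed in. The function name is hypothetical.
async function generateVerticalImage(openai, prompt) {
  const result = await openai.images.generate({
    model: "dall-e-3",
    prompt,
    n: 1, // DALL-E 3 generates one image per request
    size: "1024x1792", // portrait size offered for DALL-E 3 (assumed choice)
  });
  // The response lists the generated images; return the link to the first.
  return result.data[0].url;
}
```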

Img 11

Prompt: “Breaking news: Palestinian Authority Prime Minister Mohammed Shtayyeh has offered the resignation of the government ahead of a cabinet meeting on Monday. Follow today's live coverage.”

The function creates the final prompt for DALL-E, which is then passed to the image_generate() function to actually create the image.

    // rewrite the prompt to fit the policy and DALL-E requirements
    async function promptGeneration(instruction) {
      const completion = await openai.chat.completions.create({
        messages: [
          {
            role: "user",
            content: `Rewrite the following text, making it a perfect prompt for DALL-E 3 to create a vertical oriented image, adding all the details so that the scene described by the text is as well-described as possible. Reach a maximum of 2000 characters without ever creating a new paragraph. Adapt the final prompt so as not to violate your policy. Do not add quotes within the text. Specify that the image should be a photo, avoiding collages and illustrations: ${instruction}`,
          },
        ],
        model: "gpt-4-turbo-preview",
        temperature: 0,
        top_p: 0,
      });
      // keep the original post text as the description
      const description = instruction;
      // the rewritten text becomes the prompt for DALL-E 3
      instruction = completion.choices[0].message.content;
      image_generate(instruction, description);
    }

The quality of the generated images is also closely linked to the source of the news. After numerous attempts with various prompts, it appears that the AI’s dataset contains less information about Asian countries. Consequently, images generated for news originating from Asian countries tend to be less realistic and less comprehensive.

Img 12

From left to right: America, Europe, Asia.

Within the code, several checks have been implemented to ensure that the image generation actually occurs and that everything works smoothly without being blocked by any policies. If the image generation fails or encounters any issues, after two attempts, the getTweet() function is called again, and a new piece of news is utilized for the next image. This ensures a continuous flow of updated content and prevents disruptions in the display process.
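The retry behaviour described above can be sketched as a small wrapper. The `generate` and `fetchNextHeadline` callbacks are hypothetical stand-ins for the image generation call and getTweet(); only the limit of two attempts comes from the description.

```javascript
// Hypothetical retry wrapper: `generate` and `fetchNextHeadline` stand in for
// the image generation call and getTweet(); the names are assumptions.
async function generateWithRetry(headline, { generate, fetchNextHeadline, maxAttempts = 2 }) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return await generate(headline);
    } catch (error) {
      console.warn(`Attempt ${attempt} failed: ${error.message}`);
    }
  }
  // Every attempt failed (for example a policy rejection): move on to fresh news.
  const nextHeadline = await fetchNextHeadline();
  return generateWithRetry(nextHeadline, { generate, fetchNextHeadline, maxAttempts });
}
```

Note that this sketch keeps trying new headlines indefinitely; a real deployment would probably add a pause or an upper bound.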

The entire installation relies on OpenAI’s API for both prompt creation and image generation. However, DALL-E could potentially be replaced by tools like Stable Diffusion, which can run locally and incur no per-call costs for image generation. They are also subject to less censorship, allowing for the creation of more realistic and explicit images.

Supabase

Once the image is ready, its reference link and the related metadata (date, time, country, etc.) are uploaded to a Supabase table. Supabase is a database that allows content to be uploaded and downloaded in real time, organized into tables similar to Excel spreadsheets. A dedicated table has been created for each continent, making it possible to identify the image’s zone of origin. The new image is assigned a numerical ID that follows that of the most recently generated news, and the server waits until the image has been used and its “used” variable is set to TRUE. At that point, the code cycle restarts, fetching a new post.
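The upload step could be sketched as below, assuming a supabase-js client is passed in. The function name and the column names other than `used`, `description` and `time_zone` are assumptions about the table layout, not the actual schema.

```javascript
// Sketch of the upload step. `supabase` is a supabase-js client passed in;
// `image_url` and `state` are assumed column names.
async function saveGeneratedImage(supabase, table, { imageUrl, description, state, timeZone }) {
  const row = {
    image_url: imageUrl,
    description,
    state,
    time_zone: timeZone,
    used: false, // the client sets this to true once the image has been shown
  };
  // Insert the row and read it back to obtain the id assigned by the database.
  const { data, error } = await supabase.from(table).insert(row).select().single();
  if (error) {
    throw new Error(`Upload to "${table}" failed: ${error.message}`);
  }
  return data;
}
```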

Img 13

Supabase America’s table structure.

Client

Supabase

On the client side, the data uploaded to Supabase is periodically checked to verify if the latest uploaded image has already been displayed on the screen. If it hasn’t been displayed yet, it is downloaded along with the accompanying metadata necessary to create the photo caption. The images are downloaded as objects containing the reference link to the image, as well as other data saved in the table such as “time_zone”.

Function called to fetch the last uploaded image from the database.

    // get the last uploaded image as an object
    async function fetchLastUploadedImage() {
      const { data, error } = await supabase
        .from("america")
        .select("*")
        .order("id", { ascending: false })
        .limit(1)
        .single();
      if (error) {
        console.error("Error fetching the last uploaded image URL:", error);
        return null;
      }
      return data;
    }

Transition

To achieve the pixel transition from one image to another, the native HTML canvas API was used rather than implementing the animation in p5.js. The images needed for the transition are loaded into the canvas. At the beginning of the animation, the colors of the first image are sampled and used to fill the canvas with squares of the corresponding colors. This happens gradually, with the number of squares, and therefore the resolution of the image, decreasing progressively. Meanwhile, the opacity of the next image begins to increase, overlaying the old image and altering the result of the color sampling, so that the transition image becomes a pixelated morph of the two images. Once the minimum resolution is reached, the number of squares begins to increase again and the opacity of the second image reaches its maximum, completing the transition.
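The shape of this transition can be sketched as a function of the animation progress. The function name, the division counts and the linear easing are assumptions chosen for illustration; only the overall pattern (resolution down then up, opacity rising) follows the description.

```javascript
// Hypothetical schedule for the transition: the division counts and the
// linear easing are assumptions, only the overall shape follows the text.
function transitionStep(progress, { maxDivisions = 128, minDivisions = 8 } = {}) {
  const t = Math.min(Math.max(progress, 0), 1); // clamp to [0, 1]
  // Distance from the midpoint: 1 at the start and end, 0 in the middle.
  const distance = Math.abs(t - 0.5) * 2;
  const divisions = Math.round(minDivisions + (maxDivisions - minDivisions) * distance);
  return {
    divisions, // squares per row: high, then low, then high again
    nextImageAlpha: t, // opacity of the incoming image grows from 0 to 1
  };
}
```

On each animation frame, values like these could drive a pixelation routine that first draws the old image and then overlays the new one at the given opacity.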

Img 14

Main steps of the transition starting from the first image, then the middle point and reaching the second image.

Video 02

Screens animation.

The transition function is called at the end of loading the next image, and simultaneously, the caption editing function as well as the prompt reading function are called.

Piece of code that calls all the elements needed for the animation.

    // function called once the image is loaded
    nextImage.onload = () => {
      emptyCartillo();
      textToSpeech(obj.description);
      pixelTransition(previousImage, nextImage).then(() => {
        previousImage = nextImage;
        nextImage = new Image();
        nextImage.crossOrigin = "Anonymous";
        editCartillo(obj);
        updateState(obj);
      });
    };

Once the animation has finished, the usage status of the image, saved in the “used” variable on Supabase, is updated by the updateState() function so that the server knows it needs to generate a new image.
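A status update of this kind could be sketched as follows. This is not the installation's updateState() itself: the function name, the injected supabase-js client and the default table name are assumptions, while the `used` flag and the `id` column follow the description.

```javascript
// Sketch of a status update: marks a row as shown. The client is passed in
// and the default table name is an assumption for a single-continent screen.
async function markImageAsUsed(supabase, obj, table = "america") {
  const { error } = await supabase.from(table).update({ used: true }).eq("id", obj.id);
  if (error) {
    console.error("Error updating the image state:", error);
    return false;
  }
  return true;
}
```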

Function that samples the colors of the image at the required positions and fills a square with each sampled color.

    // creation of the pixels
    function pixelate(divisionNumber, image, alpha = 1) {
      // draw the image with the requested opacity
      context.globalAlpha = alpha;
      let chunk = imageWidth / divisionNumber; // side of each square
      context.drawImage(image, 0, 0, imageWidth, imageHeight);
      context.globalAlpha = 1;
      // read back the pixels currently on the canvas
      let imageData = context.getImageData(0, 0, canvas.width, canvas.height).data;
      // sample the color at the center of each square and fill the square with it
      for (let y = chunk / 2; y <= canvas.height; y += chunk) {
        for (let x = chunk / 2; x <= canvas.width; x += chunk) {
          const pixelIndexPosition = (Math.floor(x) + Math.floor(y) * canvas.width) * 4;
          context.fillStyle = `rgba(${imageData[pixelIndexPosition]},${imageData[pixelIndexPosition + 1]},${imageData[pixelIndexPosition + 2]},${imageData[pixelIndexPosition + 3] * alpha})`;
          context.fillRect(x - chunk / 2, y - chunk / 2, chunk + 1, chunk + 1);
        }
      }
      return true;
    }

ElevenLabs

To capture the user’s attention and emphasize the moment of image generation and real-time news reception, an ElevenLabs voice reads out loud the news headline, which acts as a prompt. The ElevenLabs API allows the use of various voices available in the catalog, which can then be customized using different parameters. In order to achieve an emotionless voice, the stability of the voice was increased and the intonation reduced. The objective was to emulate a detached voice associated with an automated process.
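A request for such a flat reading could be assembled as in the sketch below. The function name and the specific setting values are assumptions; `stability`, `similarity_boost` and `style` are voice settings exposed by the ElevenLabs text-to-speech API.

```javascript
// Sketch of a request for a flat, detached reading. The setting values are
// assumptions, not the ones used in the installation.
function buildSpeechRequest(text, apiKey) {
  return {
    method: "POST",
    headers: {
      "xi-api-key": apiKey,
      "Content-Type": "application/json",
      Accept: "audio/mpeg",
    },
    body: JSON.stringify({
      text,
      voice_settings: {
        stability: 1, // high stability keeps the delivery even and monotone
        similarity_boost: 0.5,
        style: 0, // no style exaggeration
      },
    }),
  };
}
```

The returned object could be passed as the options argument of a `fetch` call to the text-to-speech endpoint for the chosen voice.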

Audio 01

ElevenLabs generated audio.

However, the audio is not returned directly as a playable MP3 file. Instead, the response is received as a blob, for which an object URL must be created so that it can be loaded as audio and played back.

Conversion from the blob to the audio.

    // part of the function that retrieves the audio
    try {
      const response = await fetch(`https://api.elevenlabs.io/v1/text-to-speech/${voiceId}/stream`, {
        method: "POST",
        headers: headers,
        body: body,
      });
      ...
      // read the response as a blob and play it through an object URL
      const blob = await response.blob();
      const url = window.URL.createObjectURL(blob);
      const audio = new Audio(url);
      audio.play();
    } catch (error) {
      console.error("Error generating the audio:", error);
    }

The online installation (archive)

The installation is the place where images are generated and where people can witness the act of imagination of artificial intelligence. No image generated by the installation is lost; each one is added to a digital archive that collects every creation of the machine, producing a summary of the day, week and month according to AI in the form of an image gallery.

Through a QR code, users can access an online archive containing all the images generated during the exhibition along with corresponding information. This allows the images to always be accessible, both within and beyond the exhibition space. It enables anyone to view them and have an archive summarizing the media events of the month interpreted by an AI.

Video 03

Video presentation of the digital archive.

UX and UI

Video 04

Inspiration for the navigation of the archive.

The archive is designed to emulate the navigation system of a smartphone gallery. Once the QR code is scanned, the user accesses a sequence of all the images created since the beginning of the exhibition, divided by day and by continent. To switch between continents, the user simply needs to press the button corresponding to the desired continent’s name, similar to navigating between the three screens. If more information is desired about an image, the user can zoom in on the desired photo, which opens in preview mode with its accompanying information.
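The division by day could be sketched with a small grouping helper. It assumes that every row carries an ISO timestamp in a `created_at` field, a column name that is not confirmed by this text; the helper name is also hypothetical.

```javascript
// Hypothetical grouping helper for the archive: assumes every row carries an
// ISO timestamp in `created_at` (a column name not confirmed by the source).
function groupByDay(rows) {
  const days = new Map();
  for (const row of rows) {
    const day = row.created_at.slice(0, 10); // "YYYY-MM-DD"
    if (!days.has(day)) days.set(day, []);
    days.get(day).push(row);
  }
  return days;
}
```

Since each continent has its own table, the same helper could be applied to the rows of the continent currently selected.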

Img 15

User interface of the digital archive.

The application works through continuous calls to Supabase, from which all the images uploaded to the database and their respective data are downloaded.