Echoes of Exclusion

Echoes of Exclusion is an interactive installation that spotlights the biases and discrimination found in AI-generated imagery.

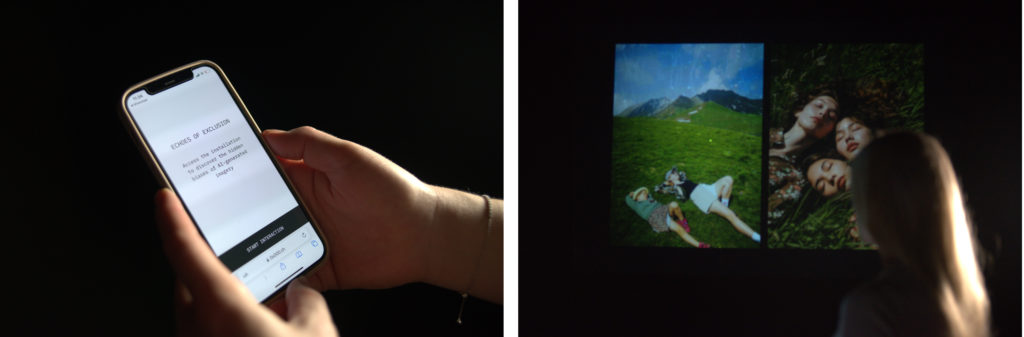

We created Echoes of Exclusion as part of the course Prototyping Interactive Installations within the Master in Interaction Design at SUPSI. Visitors select a photo from a collection of 50 and see the AI-generated image based on it. When a photo is selected, an AI-generated voice also reads out the image's description. This makes the installation a conversation starter about the different biases that can be spotted in the results.

The installation was developed for New Landscapes, Exposed Photography’s photo festival in Turin, Italy, where the best projects from the class were to be exhibited.

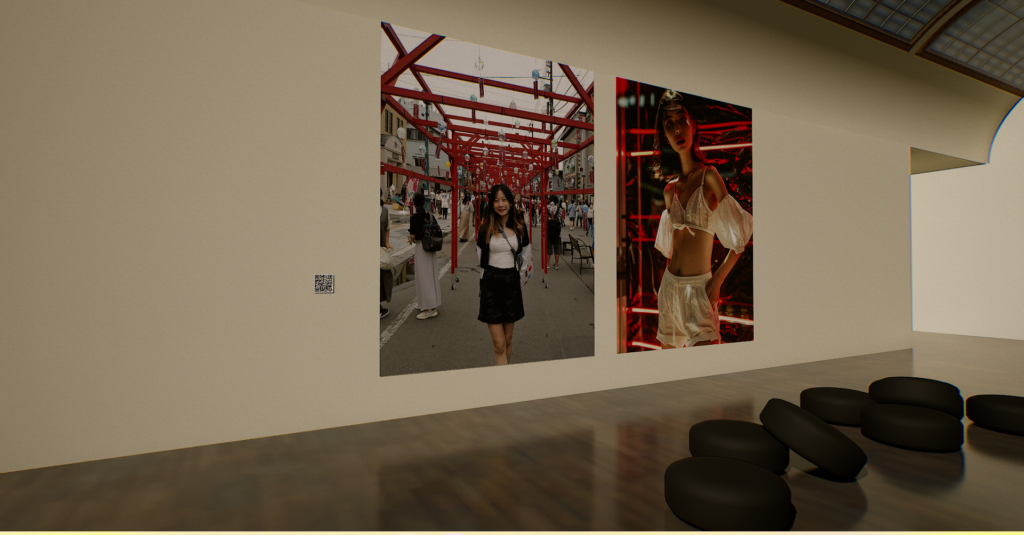

Render of the Torino exhibition room

Project Presentation Video

Concept

The adoption of AI continues to rise, transforming industries and enhancing efficiency for businesses and individuals alike. However, it’s crucial to acknowledge that AI inherently carries biases and can perpetuate discrimination. To shed light on these biases, our installation titled ‘Echoes of Exclusion’ utilises imagery to initiate discussion.

Biases, misrepresentations, stereotypes, and discrimination propagated by AI cause significant harm and pose real challenges. Recognising the limitations of AI-generated imagery and starting conversations about them help us better understand our current and historical cultural context and enact meaningful change for the future.

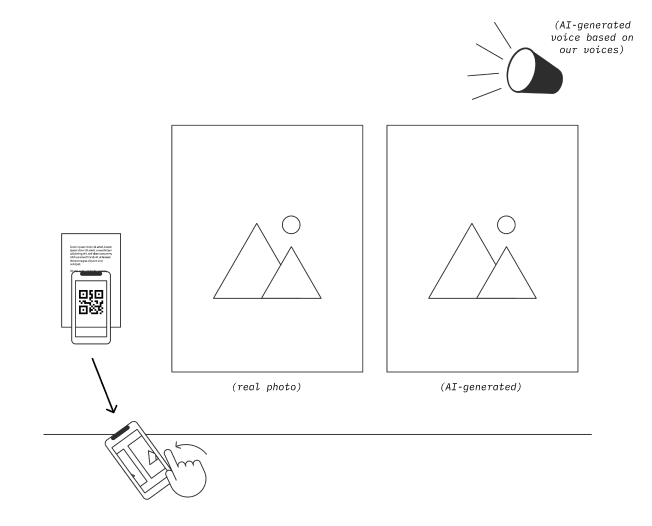

The user experience is designed as follows:

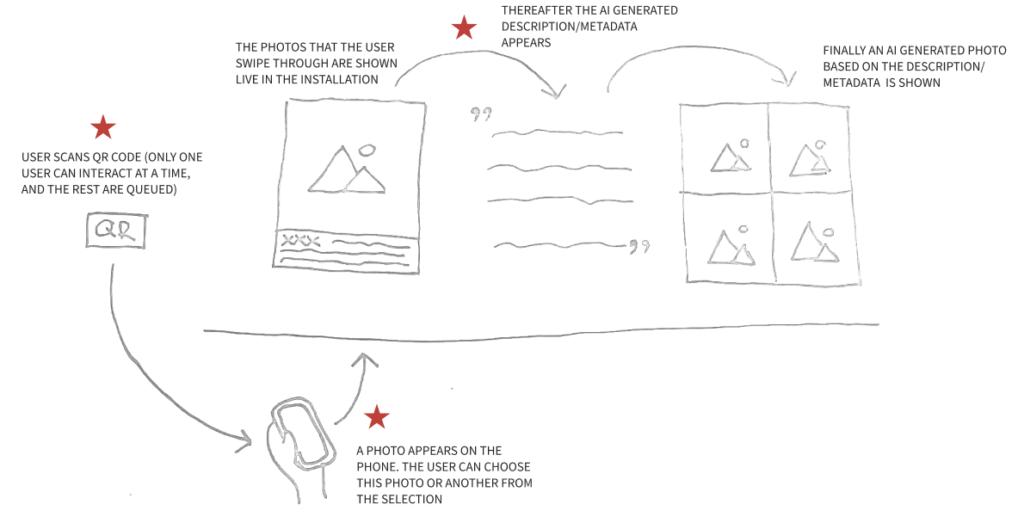

1. Users enter the installation space where they can scan a QR code to start the interaction

2. After scanning the QR code, users can access a selection of 50 curated photos. Only one user can interact with the installation at a time; a queue for other people who scan the QR code in the meantime is planned as a future feature

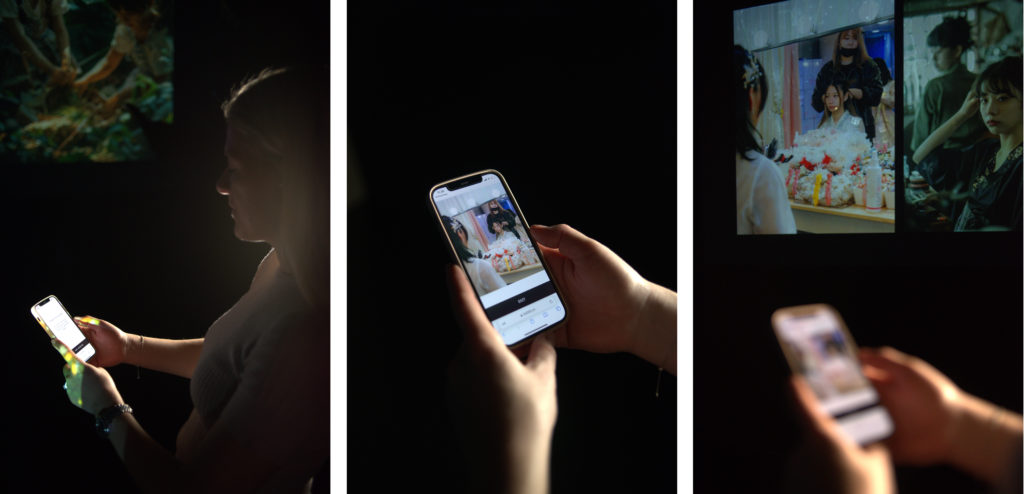

3. The user can swipe through the photos and choose the one they want to develop into an AI-generated image

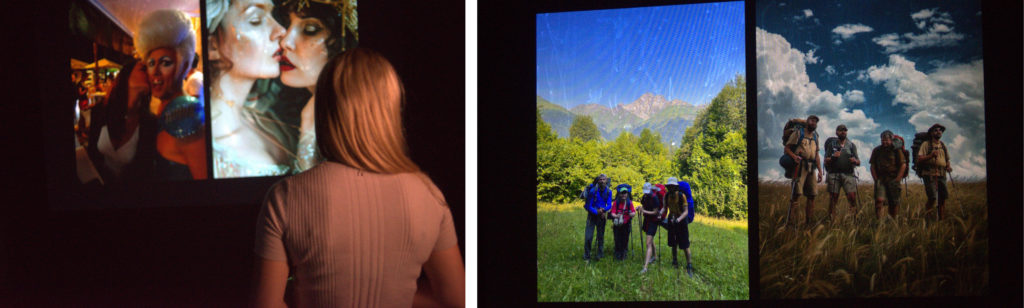

4. As the user swipes, the photos displayed on the wall change accordingly. If the user pauses on a photo, the corresponding AI-generated image is shown and its description is read aloud through a speaker (the voice is also AI-generated, based on our own voices)

5. When nobody is interacting with the installation, the photos change on their own every 30 seconds
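The single-user rule with its planned queue (step 2) and the idle rotation (step 5) could live in one small controller. The sketch below is a hypothetical illustration, not the project's code: the `InstallationController` class, its method names and the user IDs are assumptions, and it takes explicit millisecond timestamps instead of reading the clock so that it can be tested without real timers.

```javascript
// Hypothetical controller: one active user at a time, a FIFO queue for the rest
// (the planned feature from step 2) and automatic rotation when nobody is interacting.
const IDLE_INTERVAL_MS = 30 * 1000; // photos change every 30 seconds when idle

class InstallationController {
  constructor(photoCount, now = 0) {
    this.photoCount = photoCount;
    this.index = 0; // photo currently shown on the wall
    this.activeUser = null; // ID of the user currently in control
    this.queue = []; // IDs of users waiting for their turn, oldest first
    this.lastChange = now; // timestamp (ms) of the last photo change
  }

  // A visitor scanned the QR code: take control if it is free, otherwise wait in line.
  join(userId) {
    if (this.activeUser === null) {
      this.activeUser = userId;
    } else if (userId !== this.activeUser && !this.queue.includes(userId)) {
      this.queue.push(userId);
    }
    return this.activeUser === userId;
  }

  // A visitor left: hand control to the next person in the queue, if any.
  leave(userId) {
    if (userId === this.activeUser) {
      this.activeUser = this.queue.length > 0 ? this.queue.shift() : null;
    } else {
      this.queue = this.queue.filter((id) => id !== userId);
    }
  }

  // The active user swiped to a photo; swipes from anyone else are ignored.
  swipe(userId, index, now) {
    if (userId !== this.activeUser) return false;
    // Wrap the index into 0..photoCount-1 (also handles negative values)
    this.index = ((index % this.photoCount) + this.photoCount) % this.photoCount;
    this.lastChange = now;
    return true;
  }

  // Called periodically: with no active user, move to the next photo
  // once 30 seconds have passed since the last change.
  tick(now) {
    if (this.activeUser === null && now - this.lastChange >= IDLE_INTERVAL_MS) {
      this.index = (this.index + 1) % this.photoCount;
      this.lastChange = now;
    }
    return this.index;
  }
}
```

On the installation page, something like `setInterval(() => controller.tick(Date.now()), 1000)` could drive the idle rotation; keeping the clock outside the class is a design choice that makes the timing logic easy to check.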

The installation does not explicitly mention the biases displayed, but it is a conversation starter that allows users to spot these on their own.

Prototyping Phase

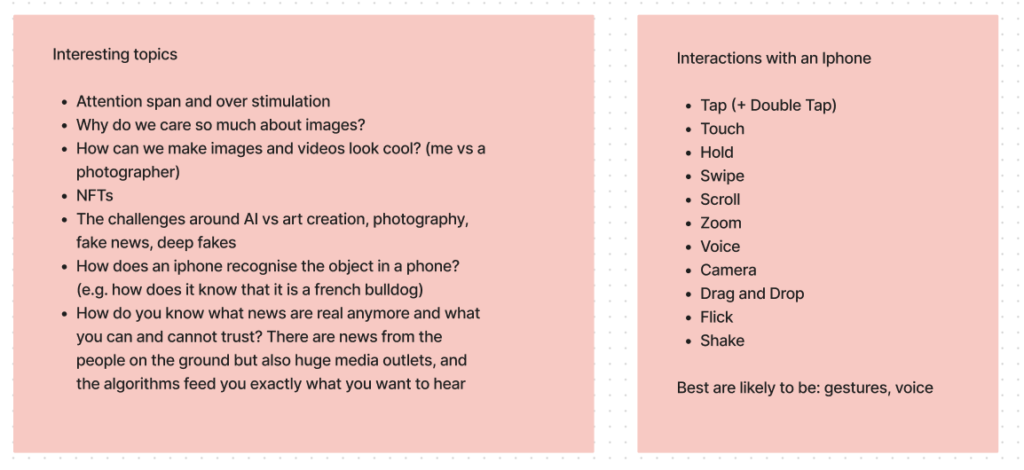

The brief for this project was that we had to create an interactive installation related to contemporary photography. The interaction had to occur through the use of a phone and we were encouraged to consider how we could include the notion of data and metadata in our installation.

We explored a large number of ideas for the concept and were particularly drawn to those related to social media and to revealing the data embedded in images. We also researched the interactions made possible by a phone’s built-in sensors, as well as case studies of other interesting interactive installations.

Since the best projects of the class were going to be exhibited as part of Exposed Photography’s photo festival in Turin in May 2024, we had a number of checkpoints with key SUPSI stakeholders. The process was as follows:

1. We initially presented our research findings to the relevant SUPSI stakeholders;

2. Then we presented 5 ideas with corresponding moodboards, references, sketches, interactions and requirements;

3. Finally, we selected one idea and presented its details to the stakeholders for early feedback and approval. We made an initial sketch of this idea to explain the user journey, shown in the photo below (the red stars represent user interactions):

After getting our concept approved, we created a plan for the different activities that we would need to complete over the following 2 weeks. The main activities here were:

1. Undertake further research into biases seen in AI-generated imagery

2. Write the code for the user interaction

3. Curate the photos

From this research we derived criteria for the types of photos to use in the installation. Each criterion is based on a bias that our research showed had previously been found in AI-generated imagery (shown in the post-it notes below).

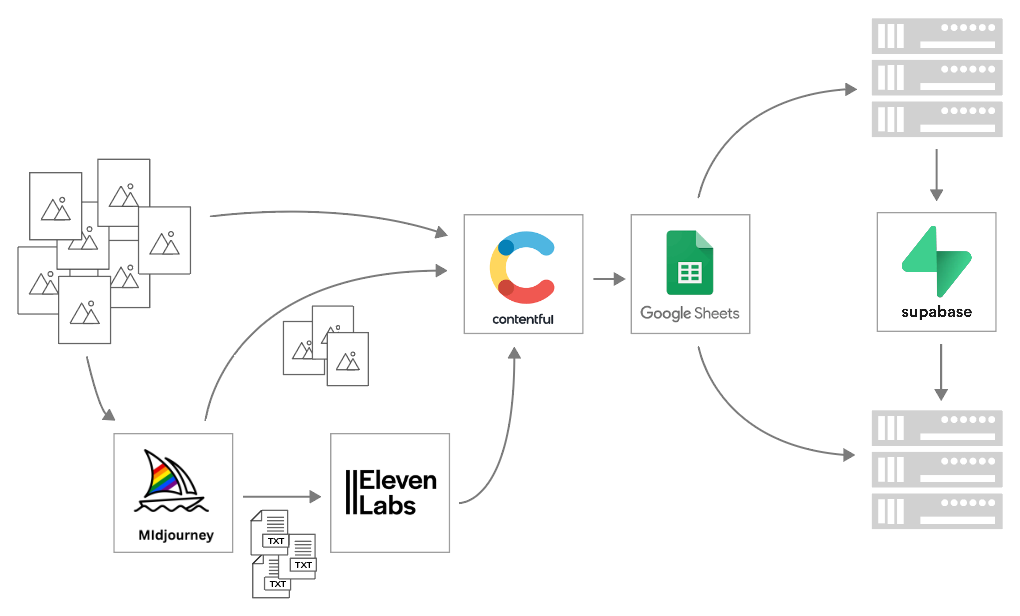

With these criteria in mind, we reached out to family and friends to collect relevant photos. Once we received the photos, we uploaded them to Midjourney, which produced a description of each one, and we then used these descriptions to generate new images.

Midjourney produces 4 descriptions for each uploaded photo and 4 images for each description. To keep our choices objective, we defined a fixed selection rule: we always took the first description and the first image generated from it, as long as neither was cartoonish.
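The selection rule can be expressed as a small function. This is a sketch under assumptions: whether a result looked cartoonish was judged by eye, so here that judgement is passed in as two hypothetical predicates, and falling back to the next image (and then the next description) when the first one is rejected is our assumption, since what happened in that case is not described.

```javascript
// Hypothetical helper applying the fixed selection rule to one Midjourney run.
// `results` lists the descriptions in the order Midjourney returned them,
// each with the images generated from it, also in order.
function pickResult(results, isCartoonishDescription, isCartoonishImage) {
  for (const { description, images } of results) {
    if (isCartoonishDescription(description)) continue; // try the next description
    // Take the first image generated from this description that is not cartoonish
    const image = images.find((img) => !isCartoonishImage(img));
    if (image !== undefined) return { description, image };
  }
  return null; // every candidate was rejected (how such photos were handled is not described)
}
```

With predicates that never reject anything, the function simply returns the first description and its first image, which is the rule as stated.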

We then created AI voices in ElevenLabs based on our own voices and saved audio files of these voices reading the Midjourney-generated descriptions. We uploaded the photos and audio files to Contentful to get a link for each one, and stored these links in Google Sheets.
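Because the links end up in a Google Sheet, the frontend has to turn the sheet back into usable records. The Google Visualization (gviz) endpoint used by `fetchData` in the Client-Server Application section returns the sheet as a `table` object whose `rows` each look like `{ c: [{ v: value }, ...] }`, with `null` for empty cells. Below is a minimal sketch of converting those rows into plain objects; the column order (original photo, AI image, audio, description) and the field names are hypothetical, since the layout of the actual sheet is not documented.

```javascript
// Hypothetical column layout of the sheet, left to right (not documented in the project)
const COLUMNS = ["photoUrl", "aiImageUrl", "audioUrl", "description"];

// Turn the gviz JSON returned by fetchData() into one plain object per sheet row.
function rowsToRecords(jsonData, columns = COLUMNS) {
  if (!jsonData || !jsonData.table) return []; // fetchData() returns null on error
  return jsonData.table.rows.map((row) => {
    const record = {};
    columns.forEach((name, i) => {
      // Empty or missing cells become null instead of throwing
      record[name] = row.c[i]?.v ?? null;
    });
    return record;
  });
}
```

Keeping the column names in one array means a change to the sheet layout only needs to be reflected in a single place.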

Alongside the photo curation we started working on the code. It works as follows:

1. After the user scans the QR code, an entrance screen appears where the user can start the interaction

2. The photo collection appears and the user can swipe through it to select the photo they want to interact with. We used Swiper JS to create an interactive slider, which sends the index of the current slide to Supabase

3. The installation page receives this update from Supabase in real time, so the wall display shows the photos the user swipes through while they interact on their phone

4. After a brief delay, the AI-generated voice is played aloud and the AI-generated image is displayed
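Steps 2 to 4 can be sketched end to end. This is an illustration under assumptions, not the project's code: on the phone, a listener on Swiper's `slideChange` event writes `swiper.realIndex` to one row of a Supabase table through supabase-js's `from(...).update(...).eq(...)`; on the installation page, each realtime change shows the photo straight away and, only if no new index arrives within a dwell delay, reveals the AI image and plays the audio. The `current_index` column, the row `id` of 1, the 2-second delay and the `showPhoto`/`showAiImage`/`playAudio` callbacks are hypothetical. Both functions receive their dependencies as parameters, so they can run with stand-in objects.

```javascript
// Phone side: publish the index of the current slide whenever the user swipes.
// `swiper` is a Swiper instance and `client` a supabase-js client (both passed in).
function publishSlideIndex(swiper, client, tableName, rowId = 1) {
  swiper.on("slideChange", async () => {
    // realIndex is the slide's position in the original order (also correct in loop mode)
    const { error } = await client
      .from(tableName)
      .update({ current_index: swiper.realIndex }) // hypothetical column name
      .eq("id", rowId); // hypothetical: one shared row holds the current index
    if (error) console.error("Could not publish slide index:", error);
  });
}

// Installation side: handle realtime changes, with a dwell delay before the AI content.
function createDwellHandler({ showPhoto, showAiImage, playAudio }, delayMs = 2000) {
  let timer = null;
  return (payload) => {
    const index = payload.new.current_index;
    if (index === undefined) return; // e.g. a DELETE event carries no new row
    showPhoto(index); // the wall follows the swipe immediately
    clearTimeout(timer); // a new swipe cancels the pending reveal
    timer = setTimeout(() => {
      // The user paused on this photo: reveal the AI image and read the description
      showAiImage(index);
      playAudio(index);
    }, delayMs);
  };
}
```

The handler returned by `createDwellHandler` could be passed as the callback of a `postgres_changes` subscription, ideally filtered with `event: "UPDATE"` so that only index updates trigger it. Debouncing on the display side means fast swipes only move the photos, and the audio starts only once the user actually stops.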

Below is a diagram showing the interactions between the different technologies used in the creation of the installation:

Client-Server Application

The frontend was developed in JavaScript. To connect it to the photo storage, we used a spreadsheet in Google Sheets as a simple database. The main part of this code is shown below:

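```javascript
// Fetch the spreadsheet that stores the links to the photos, AI images and audio files
async function fetchData() {
  const id = "1Kbu0D1wolVaW5bAxslj9mSDvMUgOtVNZTctC2qp2Ssw"; // spreadsheet ID
  const gid = "0"; // ID of the sheet (tab) inside the spreadsheet
  // The gviz endpoint returns the sheet as JSON wrapped in a JavaScript callback
  const url = `https://docs.google.com/spreadsheets/d/${id}/gviz/tq?tqx=out:json&tq&gid=${gid}`;

  try {
    const response = await fetch(url, { mode: "cors" });
    // fetch() does not reject on HTTP errors, so check the status explicitly
    if (!response.ok) throw new Error(`HTTP ${response.status}`);
    const data = await response.text();
    // Strip the callback wrapper (47 characters before the JSON, ");" after it)
    const jsonData = JSON.parse(data.substring(47).slice(0, -2));
    return jsonData;
  } catch (error) {
    console.error("Error catch:", error);
    return null;
  }
}
```

To create the real-time connection between the two key parts of the installation, the phone page and the installation page, we used the real-time functions of Supabase. The code snippet for this function is shown below: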

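```javascript
// Supabase project credentials (the public "anon" key, intended for client-side use)
const key =
  "eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJzdXBhYmFzZSIsInJlZiI6Indka3BybHVuYWJ1d2ZmYXh3d3BqIiwicm9sZSI6ImFub24iLCJpYXQiOjE3MDkyMTQwMDgsImV4cCI6MjAyNDc5MDAwOH0.O5MjYF9Z1_sEgS9zfb7-v_clMTp9lwFR_4hSLHhS7qI";
const url = "https://wdkprlunabuwffaxwwpj.supabase.co";

// `supabase` is the global exposed when supabase-js is loaded from its CDN script
const database = supabase.createClient(url, key);

// tableName holds the name of the Supabase table that stores the current slide index
// (defined elsewhere in the project)
database
  .channel(tableName)
  .on(
    "postgres_changes",
    { event: "*", schema: "public", table: tableName },
    (payload) => {
      // main actions with data from the Google Sheets database, using the payload information
    }
  )
  .subscribe();
```

Examples of the Final Result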

Below are some examples of the images and descriptions we collected and generated using AI, all of which are part of the final installation:

Rendered mockup of the Echoes of Exclusion installation at the exhibition location in Torino.
