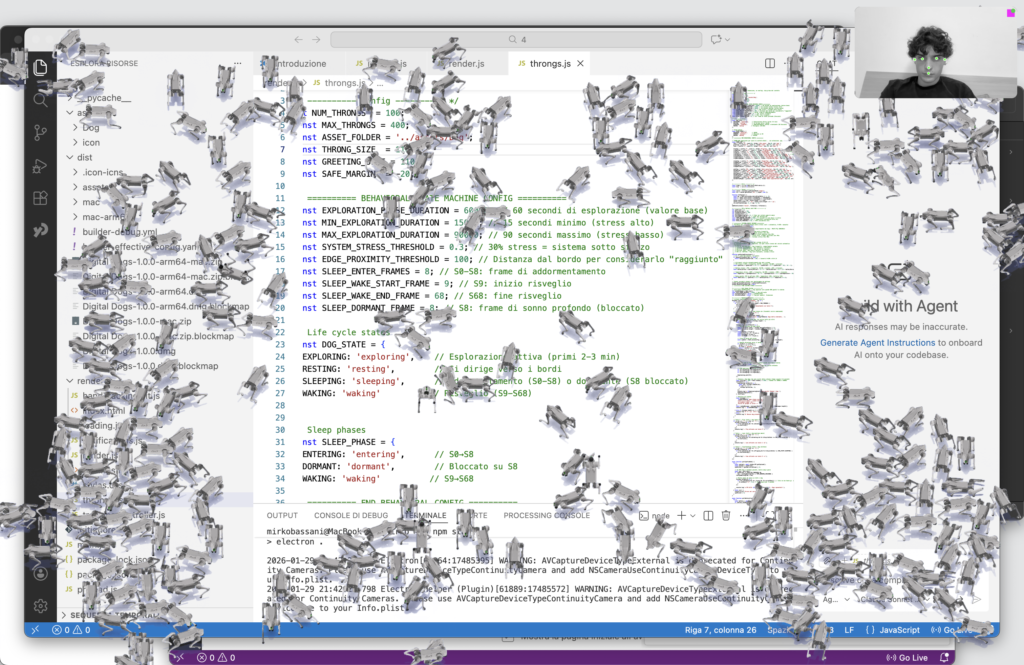

DotDot is a desktop application designed as a small autonomous companion.

It is not a productivity tool or an assistant, but a living presence that quietly inhabits the screen during everyday work.

The creature operates independently, following its own life cycle: moving, exploring, resting, sleeping, and waking without direct commands. Its purpose is not efficiency, but avoiding solitude. Over time, and often without the user noticing, it replicates itself, gradually populating the desktop like a natural process unfolding in the background.

Its behavior is shaped by the conditions of the system it inhabits.

CPU load, battery drain, and overall system pressure are perceived as stress, altering how it moves, rests, and reacts. As stress increases, the creature becomes more restless and alert, as if its environment were turning hostile.
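As a rough illustration of this mapping, the sketch below folds the three signals into one stress value and derives a few behavior parameters from it. It is not DotDot's actual implementation: the names `SystemSample`, `stress_level`, and `behavior`, the weights, and the 0.7 alert threshold are all hypothetical, and the inputs are assumed to be already normalized to the range 0 to 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SystemSample:
    """One reading of the machine's state (hypothetical, normalized inputs)."""
    cpu_load: float       # 0.0 (idle) to 1.0 (saturated)
    battery_drain: float  # 0.0 (charging or steady) to 1.0 (draining fast)
    pressure: float       # 0.0 to 1.0, e.g. memory or thermal pressure


def clamp01(x: float) -> float:
    """Keep a value inside the closed interval [0, 1]."""
    return max(0.0, min(1.0, x))


def stress_level(sample: SystemSample, weights=(0.5, 0.2, 0.3)) -> float:
    """Weighted average of the three signals; the weights are an assumption."""
    w_cpu, w_bat, w_pres = weights
    total = w_cpu + w_bat + w_pres
    raw = (
        w_cpu * clamp01(sample.cpu_load)
        + w_bat * clamp01(sample.battery_drain)
        + w_pres * clamp01(sample.pressure)
    ) / total
    return clamp01(raw)


def behavior(stress: float) -> dict:
    """Translate stress into movement and rest parameters (illustrative values)."""
    stress = clamp01(stress)
    return {
        "speed": 1.0 + 2.0 * stress,          # more stress, more restless movement
        "rest_chance": 0.6 * (1.0 - stress),  # more stress, less resting
        "alert": stress >= 0.7,               # assumed threshold for the alert state
    }


if __name__ == "__main__":
    calm = SystemSample(cpu_load=0.1, battery_drain=0.0, pressure=0.1)
    busy = SystemSample(cpu_load=0.95, battery_drain=0.6, pressure=0.8)
    for sample in (calm, busy):
        print(sample, behavior(stress_level(sample)))
```

A weighted average keeps the creature's reaction gradual: no single signal flips its mood, and the restlessness grows smoothly as the machine comes under load.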

The project exists to make invisible machine states perceptible.

Instead of showing technical data through charts or metrics, DotDot translates system activity into expressive behavior, transforming the desktop from a neutral interface into an environment shared by user, machine, and digital life.